Can computers get as good at recognising speech as humans? Odette Scharenborg (EEMCS) hopes so. She is using different techniques to improve automatic speech recognition.

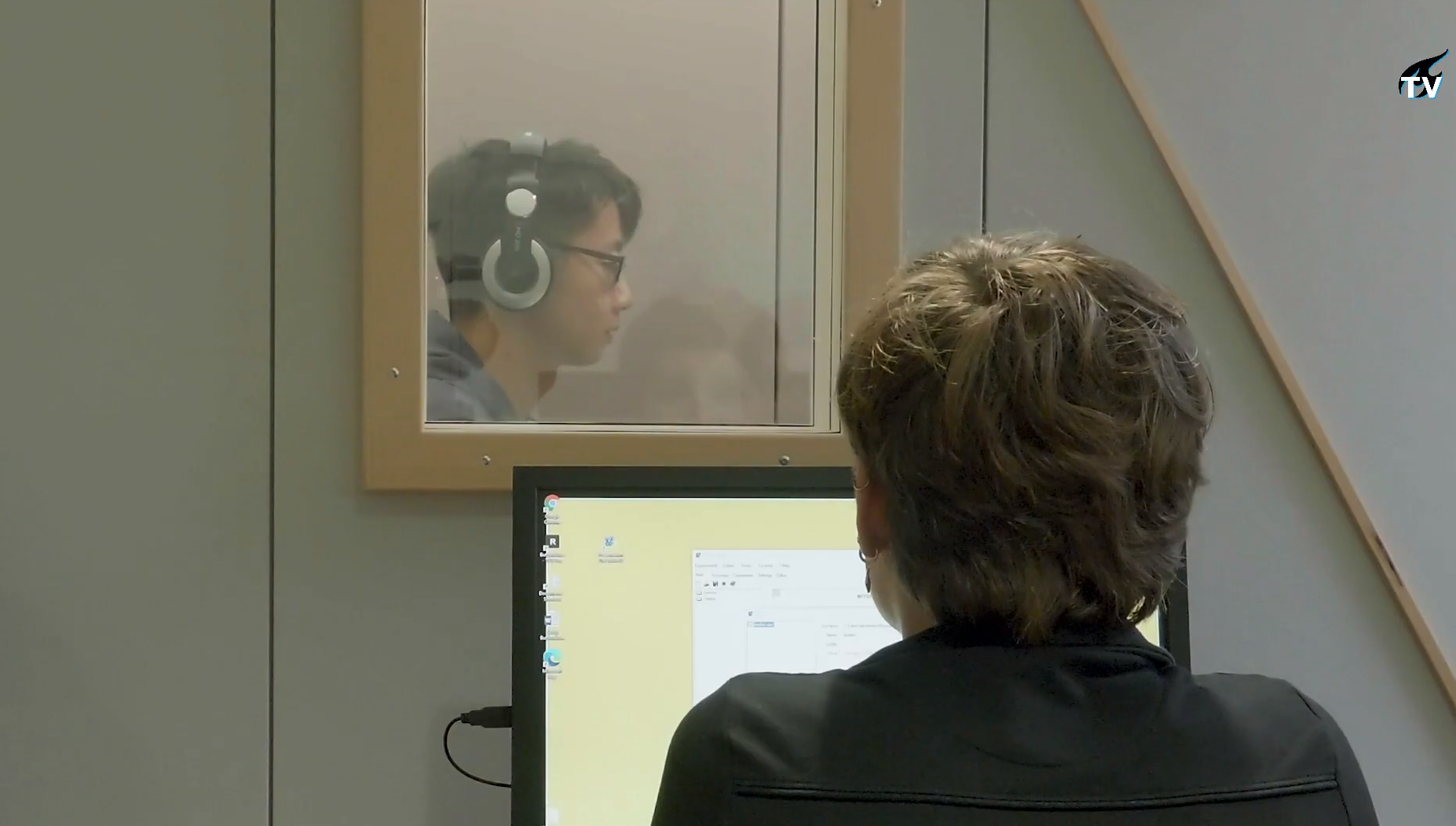

Odette Scharenborg conducting an experiment. (Screenshot: TU Delft TV)

Did you know humans are way better at recognising speech than computers? That’s because current speech recognition systems are only created for people with ‘normal’ voices and for about 2% of the languages in the world, explains Associate Professor Odette Scharenborg (Faculty of Electrical Engineering, Mathematics and Computer Science).

Scharenborg works at the TU Delft speech recognition lab where she looks at how humans process speech and adapt to non-normal speech. By doing this, she hopes to find out whether the same type of flexibility can be replicated in automatic speech recognition (ASR) systems. ASR systems automatically transform a speech signal into a written transcript. This technology is extensively used in mobile phones and laptops for a range of different tasks. Think of asking Siri, Apple’s virtual assistant, to play a song on Spotify without opening the application yourself.

- TU Delft TV visited the TU Delft speech recognition lab. You can watch the short documentary below.

Experiments

“The types of experiments that we run are twofold,” Scharenborg explains. “On the one hand we are looking at what humans are actually doing when they are listening to speech. Humans are very fast at learning new languages and computers are not that fast.” On the other hand, she is also researching the differences and similarities in speech recognition by humans and computers. Humans, for instance, are very good at adapting to non-standard speech. “We are looking into what it is that humans do so that we can improve our automatic speech recognition systems.” To do this, Scharenborg uses various research techniques ranging from human listening experiments, eye-tracking, EEG, and computational modelling to deep neural networks.

“How humans process speech in their deep neural network shows the flexibility with which humans can actually learn new languages and can adapt to deviant speech. And it shows what it does to the actual sound representations in our human brains,” explains Scharenborg. “Deep neural networks show whether the same type of flexibility can be implemented in automated speech recognition systems.”

- Find out more about Odette Scharenborg’s research and the TU Delft speech recognition lab here.

TU Delft TV / TU Delft TV is a collaboration between Delta and the Science Centre. The crew consists of TU Delft students.
